Batch Management – Optimizing your batch flow

Running daily financial workflows is a complex and time-critical task. It is essential that every flow is optimized to perform efficiently and that dependencies are transparent to the business teams. When an incident occurs, the value chain must be informed, and subsequent flows should be managed in a controlled and predictable way.

Typically, Investment Management Systems (IMS) have a powerful batch engine to facilitate front-to-back workflows, with integrations to different areas of the platform. To compensate for its limited scheduling abilities, the IMS is normally supported by a scheduling tool. Thus, implementing scheduling correctly may be the difference between a slow and rigid platform and a fast and responsive one. Combining the best of your investment management platform with a state-of-the-art scheduler may be a game changer for your business.

Integrating the Scheduler of Choice

It is possible to connect a scheduler to your IMS either by inserting records directly into the database or by passing parameters to the executable. Here is a brief overview of the pros and cons of each interface. Database integration requires Read, as well as full Insert, Update and Delete, access to the batch processing tables directly on the database. This method allows for full transparency and thus effective error-handling, but may breach corporate security policies, notably if the scheduler connects to external vendors. Passing parameters to the executable through scripts offers a more limited set of functionalities, but may be faster to implement and may offer sufficient features.

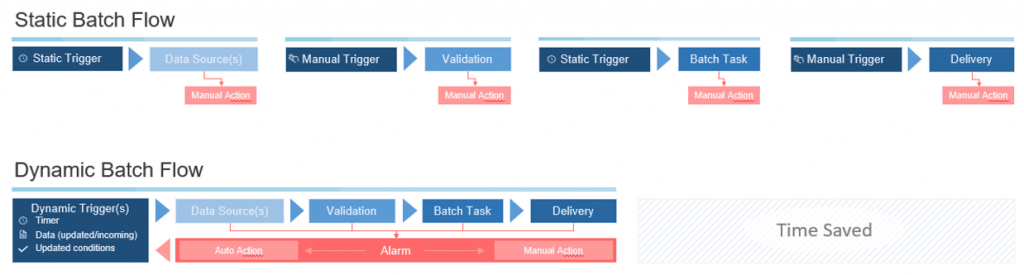
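To make the two integration styles concrete, here is a minimal sketch in Python. Everything named in it is an assumption for illustration only: the `batch_queue` table, its columns, the `ims_batch` executable and its `--job` and `--date` flags are hypothetical and do not describe any particular IMS. An in-memory SQLite database stands in for the IMS database.

```python
import shlex
import sqlite3


def queue_job_via_database(conn, job_name, run_date):
    """Database-style integration: insert a request row and return its id."""
    cur = conn.execute(
        "INSERT INTO batch_queue (job_name, run_date, status) "
        "VALUES (?, ?, 'QUEUED')",
        (job_name, run_date),
    )
    conn.commit()
    return cur.lastrowid


def read_job_status(conn, request_id):
    """Read access to the same table is what gives the scheduler transparency."""
    row = conn.execute(
        "SELECT status FROM batch_queue WHERE id = ?", (request_id,)
    ).fetchone()
    return row[0] if row else None


def build_executable_command(job_name, run_date):
    """Executable-style integration: only a command line is handed over."""
    # Hypothetical executable name and flags.
    return ["ims_batch", "--job", job_name, "--date", run_date]


# Stand-in for the IMS database (hypothetical schema).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE batch_queue ("
    "id INTEGER PRIMARY KEY, job_name TEXT, run_date TEXT, status TEXT)"
)

request_id = queue_job_via_database(conn, "EOD_PRICING", "2024-01-31")
status = read_job_status(conn, request_id)
command = build_executable_command("EOD_PRICING", "2024-01-31")

print(status)
print(shlex.join(command))
```

With the database route the scheduler can read the job status back at any time, whereas the executable route typically only returns whatever the process reports when it exits. That difference is the transparency trade-off described above.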

The Optimized Batch Flow

Whether choosing ActiveBatch, JAMS, Redwood, Flightplan or any other scheduling tool, a fully optimized batch flow has several distinct qualities:

- Full transparency

- Idle time minimized

- Only required jobs active

- Clearly defined error-handling

- Continuous monitoring and alerting

A successful implementation of the scheduler of choice aids in all the above objectives. Transparency of the batch flow, its dependencies, ownership, and current status helps the entire value chain perform and deliver on time. Well-structured batch scheduling makes use of most of the available resources on the application servers. Thus, the best practice is to send a task for execution as soon as all its dependencies are met, in turn minimizing unnecessary idle time.
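The idea of releasing tasks as soon as their dependencies are met can be sketched as follows. This is a simplified illustration, not how any specific scheduler works internally; the job names and the `ready_jobs` helper are hypothetical.

```python
def ready_jobs(dependencies, completed, running):
    """Return the jobs whose upstream dependencies have all completed."""
    return sorted(
        job
        for job, upstream in dependencies.items()
        if job not in completed
        and job not in running
        and set(upstream) <= completed
    )


# Hypothetical batch flow: each job maps to the jobs it depends on.
dependencies = {
    "load_prices": [],
    "load_positions": [],
    "valuation": ["load_prices", "load_positions"],
    "client_report": ["valuation"],
}

# Nothing has run yet: both loaders can start in parallel.
first_wave = ready_jobs(dependencies, completed=set(), running=set())

# Once both loaders are done, valuation is released without further waiting.
second_wave = ready_jobs(
    dependencies,
    completed={"load_prices", "load_positions"},
    running=set(),
)

print(first_wave)
print(second_wave)
```

Evaluating readiness from dependencies, rather than from fixed start times, is what lets independent jobs run in parallel and removes the padding that clock-based schedules need.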

Even when the scheduler determines that a batch task should be queued for execution, problems may still arise. Broken formulas and functions may not yield the expected result, static data could be missing, or vendor formats might have changed. These are but a few examples of how a straight-through processing (STP) flow may be broken and necessitate intervention. In these scenarios it is important that the following measures are taken:

A. Suspend child and dependent flows

B. Alert the impacted business team

C. Execute emergency procedures

With a good understanding of business flows, it is possible to limit the impact of the incident by suspending subsequent tasks. In the meantime, unrelated flows may proceed and provide business continuity. Primary owners should be notified, so they are able to investigate and possibly solve the incident, while secondary users are notified to brace for the fallout and delays. Finally, a well-designed dynamic scheduler will have predefined processes for what to do in case a batch flow breaks down. These include waiting, retrying, or running a secondary flow.
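The three measures (suspend, alert, execute emergency procedures) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the job names, the alert text, and the helpers `downstream_of` and `run_with_recovery` are hypothetical, and a real scheduler would provide its own equivalents.

```python
import time
from collections import deque


def downstream_of(failed_job, dependencies):
    """Return every job that directly or indirectly depends on failed_job."""
    children = {}
    for job, upstream in dependencies.items():
        for parent in upstream:
            children.setdefault(parent, []).append(job)
    suspended, queue = set(), deque([failed_job])
    while queue:
        for child in children.get(queue.popleft(), []):
            if child not in suspended:
                suspended.add(child)
                queue.append(child)
    return suspended


def run_with_recovery(task, fallback=None, max_retries=2, wait_seconds=0.0):
    """Run task, waiting and retrying on failure, then try the secondary flow."""
    for attempt in range(1 + max_retries):
        try:
            return task()
        except Exception:
            if attempt < max_retries:
                time.sleep(wait_seconds)  # wait before the next retry
    if fallback is not None:
        return fallback()
    raise RuntimeError("task failed and no secondary flow is defined")


# Hypothetical flow: each job maps to the jobs it depends on.
dependencies = {
    "load_prices": [],
    "load_fx": [],
    "valuation": ["load_prices"],
    "client_report": ["valuation"],
    "fx_exposure": ["load_fx"],
}
attempts = {"count": 0}


def load_prices():
    attempts["count"] += 1
    raise ValueError("vendor file format changed")


def load_prices_from_backup():
    return "prices loaded from secondary flow"


# A. Suspend child and dependent flows; unrelated flows keep running.
suspended = downstream_of("load_prices", dependencies)
unaffected = sorted(set(dependencies) - suspended - {"load_prices"})

# B. Alert the impacted business team (here only a message is built).
alert = f"ALERT: load_prices failed; suspended: {', '.join(sorted(suspended))}"

# C. Execute emergency procedures: wait, retry, then run the secondary flow.
result = run_with_recovery(load_prices, fallback=load_prices_from_backup)

print(alert)
print(unaffected)
print(result)
```

Only the jobs downstream of the failure are held back, so `load_fx` and `fx_exposure` continue and provide business continuity while the price load is retried and then replaced by the secondary flow.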

Reach out to Axxsys Consulting to hear more about how we can help optimize your batch flows to perform at their best by implementing a dynamic scheduling tool with efficient error-handling and valuable alerts.

Blog author: Jakob Banning, Senior Manager, Axxsys Consulting

For more information about our services please contact info@axxsysconsulting.com